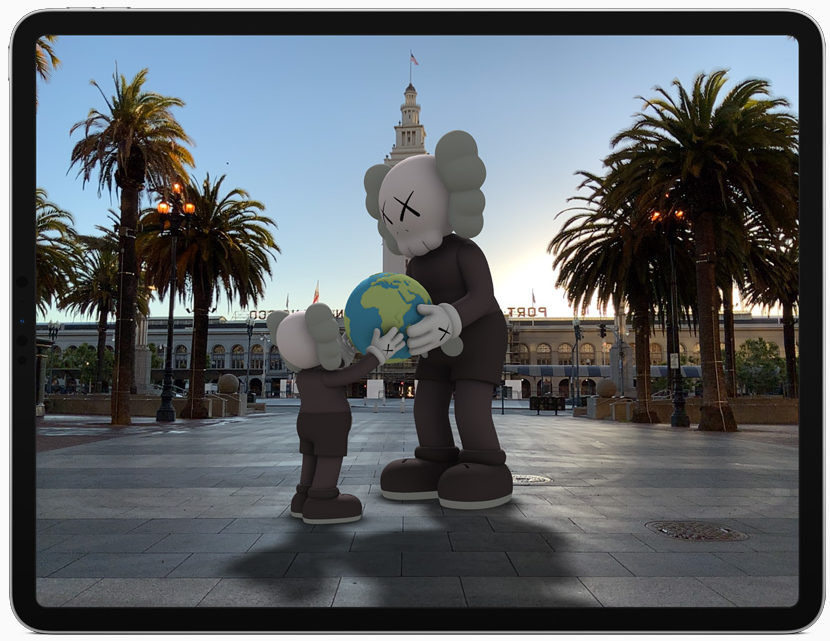

June 23, 2020 – Apple has announced iPadOS 14, which introduces new features designed specifically for iPad, including made-for-iPad app designs, streamlined system interactions with compact UIs for Siri, Search, and calls, new handwriting features with Apple Pencil, and more. Along with the preview of iPadOS 14, the company also announced ARKit 4, which delivers a brand new Depth API that allows developers to create more powerful augmented reality (AR) features in their apps.

“With iPadOS 14, we’re excited to build on the distinct experience of iPad and deliver new capabilities that help customers boost productivity, be more creative, and have more fun,” said Craig Federighi, Apple’s senior vice president of Software Engineering. “With new compact designs for system interactions and new app designs specifically tailored to iPad, even better note-taking capabilities with Apple Pencil, and more powerful AR experiences, iPadOS 14 delivers an amazing experience that keeps it in a class of its own.”

Apple states that AR has proven to be an immensely powerful technology that helps users to accomplish tasks in ways never before possible. ARKit 4, Apple’s augmented reality platform, delivers three main additions: a brand new Depth API that allows developers to access even more precise depth information captured by the new LiDAR Scanner on the iPad Pro; Location Anchoring, which leverages the higher resolution data in Apple Maps to place AR experiences at a specific point in the world; and extended support for face tracking, so that more users can experience the joy of AR in photos and videos.

Depth API

The advanced scene understanding capabilities built into the LiDAR Scanner allow this API to use per-pixel depth information about the surrounding environment. When combined with the 3D mesh data generated by Scene Geometry, this depth information makes virtual object occlusion even more realistic by enabling instant placement of virtual objects and blending them seamlessly with their physical surroundings. Developers can use the Depth API to drive new features and capabilities within their apps, such as taking body measurements for more accurate virtual try-on, or testing how paint colors will look before painting a room.

Location Anchors

ARKit 4 also introduces Location Anchors for iOS and iPadOS apps, which leverage the higher resolution data of the new map in Apple Maps, where available, to pin AR experiences to a specific point or geographic coordinate in the world. Note that Location Anchors are currently available only in select cities, and are supported only on iPhone X models and later, and on Wi-Fi + Cellular models of iPad Pro (2nd generation and later), iPad Air (3rd generation), and iPad mini (5th generation).

Expanded Face Tracking Support

Support for Face Tracking now extends to the front-facing camera on any device with the A12 Bionic chip or later, including the new iPhone SE, so even more users can enjoy AR experiences using the front-facing camera. Up to three faces at once can be tracked using the TrueDepth camera to power front-facing camera experiences such as Memoji and Snapchat.

Other ARKit features include:

- Scene Geometry – Users can create a topological map of a space with labels identifying floors, walls, ceilings, windows, doors, and seats, which unlocks object occlusion and real-world physics for virtual objects, and also provides more information to power AR workflows;

- Instant AR – The LiDAR Scanner on iPad Pro enables fast plane detection, so AR objects can be placed in the real world instantly, without scanning;

- People Occlusion – AR content realistically passes behind and in front of people in the real world, making AR experiences more immersive while also enabling green screen-style effects in almost any environment, according to Apple;

- Motion Capture – Capture the motion of a person in real time with a single camera. By understanding body position, height and movement as a series of joints and bones, users can use motion and poses as an input to AR experiences;

- Simultaneous Front and Back Camera – Users can simultaneously use face and world tracking on the front and back cameras, opening up new possibilities. For example, users can interact with AR content in the back camera view using just their face;

- Multiple Face Tracking – ARKit Face Tracking tracks up to three faces at once, using the TrueDepth camera on iPhone X, iPhone XS, iPhone XS Max, iPhone XR, and iPad Pro to power front-facing camera experiences like Memoji and Snapchat;

- Collaborative Sessions – With live collaborative sessions between multiple people, users can build a collaborative world map, making it faster for developers to build AR experiences and for users to get into shared AR experiences like multiplayer games;

- Additional Improvements – Detect up to 100 images at a time and get an automatic estimate of the physical size of the object in the image. 3D object detection is more robust, as objects are better recognized in complex environments. And now, machine learning is used to detect planes in the environment even faster.

The above features are now enabled or improved on the iPad Pro for all apps built with ARKit, without any code changes.

The developer preview of iPadOS 14 is available to Apple Developer Program members at developer.apple.com as of yesterday, and a public beta will be available to iPadOS users next month at beta.apple.com. New software features will be available this fall as a free software update for iPad Air 2 and later, all iPad Pro models, iPad 5th generation and later, and iPad mini 4 and later.

Image credit: Apple

About the author

Sam Sprigg

Sam is the Founder and Managing Editor of Auganix. With a background in research and report writing, he has been covering XR industry news for the past seven years.