Augmented reality (AR) is a technology that overlays computer-generated content onto the real world, enhancing a user’s perception of reality rather than replacing it. In an AR experience, digital images, sounds, or other data are superimposed on a user’s view of the physical environment in real time.

Unlike virtual reality (VR), which immerses users in a completely artificial environment, augmented reality adds virtual elements to their real surroundings. Common examples of augmented reality include Instagram or Snapchat filters that give the user puppy ears, or the popular AR mobile game Pokémon GO, in which virtual creatures appear on neighborhood streets when viewed through a mobile phone screen.

AR has rapidly evolved from a novel concept into a mainstream technology used in gaming, education, healthcare, retail and more. With billions of AR-enabled smartphones in use and new display-enabled smart glasses seemingly being released every month, augmented reality is poised to transform how we interact with digital information every day.

Definition of Augmented Reality

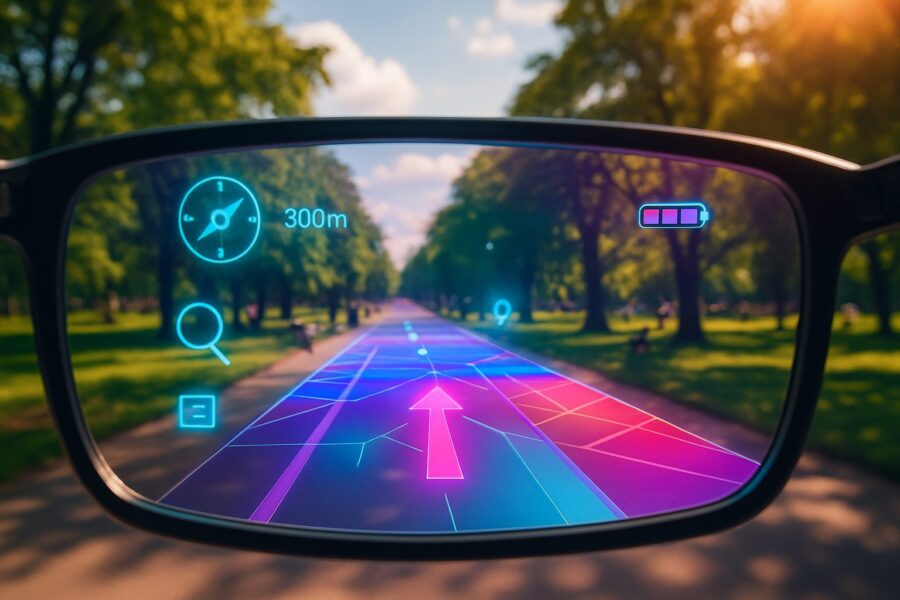

In simple terms, augmented reality is the blending of digital content with the real world. Through an AR-capable device (like a smartphone or AR glasses), a user can still see their physical environment, but with an added layer of computer-generated imagery or information on top. This digital overlay can include anything from 3D models and graphics to sounds, videos, or textual data. The key is that AR content aligns with the real world: for example, a 3D navigation arrow might appear to stick to the sidewalk in front of a user, or a virtual dinosaur could stand on a coffee table as viewed through the user’s phone screen.

Importantly, AR does not remove a user from reality; it augments the real environment. This contrasts with virtual reality (VR), which completely replaces the real world with a simulated one, and with mixed reality (MR), which merges real and virtual elements that can interact with each other. (We explain the differences between AR, VR, and MR in more detail in a separate post.)

A Brief History of AR

Augmented reality may seem like a product of the 21st century, but its conceptual roots go back several decades. The first true AR system is often attributed to computer scientist Ivan Sutherland, who in 1968 built a head-mounted display that could overlay simple graphics onto a user’s view. However, the term “augmented reality” wasn’t coined until 1990, when Boeing researcher Tom Caudell used it to describe a digital guidance system for aircraft assembly.

Through the 1990s, AR remained mostly in research labs and specialized military projects. By the early 2000s, academic and hobbyist communities had tools like ARToolKit (a software library for building AR applications using simple cameras), but AR was still far from mainstream. The late 2000s and 2010s are when AR truly began to flourish:

- The rise of smartphones put a camera, sensors, and a powerful computer in everyone’s pocket. By around 2009–2010, some of the first mobile AR apps appeared, using the phone’s camera to overlay directions, game characters, or restaurant reviews onto the real world.

- In 2013, Google introduced Google Glass, an early attempt at consumer AR smart glasses that displayed brief information in the wearer’s field of view.

- In 2015, Microsoft unveiled the HoloLens, a sophisticated AR headset that projects interactive holograms into the user’s environment, showcasing the potential of AR for professional use.

- In 2016, the mobile game Pokémon GO became a cultural phenomenon, with millions of people experiencing AR by hunting virtual creatures in real locations. This was a watershed moment that brought AR into popular awareness.

- By the late 2010s, tech giants doubled down on AR: Apple and Google released AR development platforms (ARKit for iOS and ARCore for Android) to empower developers, and numerous industries started exploring practical AR applications.

Today, AR is everywhere, from social media filters to enterprise tools. As the technology continues to mature with better hardware and more content, both consumer and enterprise adoption are expected to keep growing, with billions of AR users anticipated by 2030 (according to Statista).

How Does Augmented Reality Work?

At its core, augmented reality works by overlaying digital content in a way that’s anchored to the real world. It achieves this through a combination of hardware and software working together in real time. There are three fundamental steps in any AR system:

- Sensing and Tracking: An AR device first observes the physical world. Using a camera and sensors (accelerometer, gyroscope, GPS, depth sensor, etc.), it detects the environment and the device’s position. For example, your smartphone’s camera might detect a flat surface in front of you, or an AR headset might scan the room to map walls and furniture. This step, often aided by techniques like SLAM (Simultaneous Localization and Mapping), allows the system to understand where and how to place virtual objects in a user’s real space.

- Processing and Recognition: Next, the AR software analyzes the sensor data to recognize features or patterns. It might detect a specific marker (like a QR code or an image) that tells it what content to display and where. In other cases, markerless AR algorithms interpret the geometry of the environment or use GPS coordinates to decide where to insert content. Essentially, the software determines what you’re looking at and where a digital asset can fit. Advanced AR systems even use computer vision and AI to identify real-world objects in view, adding contextual information (for instance, recognizing a landmark through a smartphone’s camera and fetching details about it).

- Rendering and Display: Finally, the AR system renders the virtual content and displays it over your view of reality. This could be shown on a phone screen, or via transparent lenses with AR smart glasses. In head-worn AR devices, this display is typically achieved using optical technologies such as waveguides, which guide and project light from tiny microdisplays into the user’s field of view while keeping the lenses transparent. Digital content is shown in the correct perspective and position so that it appears seamlessly integrated into the real scene. The goal is for users to perceive virtual elements as if they were actually present in their world. For example, if an AR app shows a virtual coffee mug on a real desk, as you move around, the mug stays anchored on the desk and its size and angle adjust realistically based on your viewpoint.
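To make the “mug stays anchored” idea concrete, here is a minimal sketch of the geometry behind the rendering step. It assumes an ideal pinhole camera with no lens distortion and a simplified camera that can only turn left and right (yaw). The names `Intrinsics`, `world_to_camera`, `project`, and `apparent_width_px`, along with the focal length and screen values, are hypothetical and for illustration only; real AR frameworks supply the camera pose and intrinsics from their own tracking systems.

```python
import math
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Hypothetical pinhole camera intrinsics, in pixels."""
    fx: float  # horizontal focal length
    fy: float  # vertical focal length
    cx: float  # principal point x (usually near the image center)
    cy: float  # principal point y


def world_to_camera(point, cam_pos, yaw):
    """Express a world-space point (x right, y up, z forward) in camera space.

    Simplified pose: the camera sits at `cam_pos` and is rotated only about
    the vertical axis by `yaw` radians; it looks along its own +z axis.
    """
    dx = point[0] - cam_pos[0]
    dy = point[1] - cam_pos[1]
    dz = point[2] - cam_pos[2]
    # Undo the camera's rotation (rotate the offset by -yaw about the y axis).
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * dx - s * dz, dy, s * dx + c * dz)


def project(point_cam, K):
    """Pinhole projection to pixel (u, v); None if the point is behind the camera."""
    x, y, z = point_cam
    if z <= 0:
        return None
    u = K.cx + K.fx * x / z
    v = K.cy - K.fy * y / z  # image rows grow downward while world y grows upward
    return (u, v)


def apparent_width_px(width_m, depth_m, K):
    """On-screen width of an object facing the camera; shrinks as 1/depth."""
    return K.fx * width_m / depth_m


if __name__ == "__main__":
    K = Intrinsics(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)  # 1280x720 image
    mug = (0.0, -0.3, 2.0)  # virtual mug anchored on a desk, in meters
    mug_width = 0.1

    for cam_z in (0.0, 1.0):  # the user steps 1 m closer to the desk
        cam = world_to_camera(mug, (0.0, 0.0, cam_z), yaw=0.0)
        print(project(cam, K), apparent_width_px(mug_width, cam[2], K))
```

Stepping from 2 m to 1 m away halves the depth `z`, so the mug is drawn twice as wide and lower on the screen. An AR renderer repeats this kind of calculation every frame with the latest tracked pose, which is why anchored content appears to stay put as you move.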

AR Hardware and Devices: To experience AR, you need a compatible device. The most common is a smartphone or tablet, with the device’s rear camera acting as the “eyes” for the experience and its screen displaying the augmented content. This makes basic AR widely accessible, since anyone with a modern phone can try AR apps.

For a more immersive experience, specialized AR headsets and smart glasses are used. Devices like Microsoft’s HoloLens or the Magic Leap headset are wearable computers with transparent displays that project holograms into a user’s environment.

With an AR headset, digital objects can appear to float in your real surroundings without you needing to hold up a screen. Other forms of AR hardware include heads-up displays (HUDs) in car windshields that show navigation data on the road ahead, and projected AR systems that cast images onto physical surfaces.

As technology advances, AR devices are becoming more powerful and compact – there’s even research into AR contact lenses that might one day overlay information directly onto users’ eyes.

Types of Augmented Reality

There are many different types of augmented reality implementations, generally categorized by how the AR content is triggered and anchored. These can include:

- Marker-Based AR: Uses a specific visual marker (such as a QR code, printed picture, or unique image pattern) to initiate the AR experience. When the device’s camera recognizes the marker, the software overlays a predefined digital object or animation onto it. For example, a magazine might have a printed code that, when scanned, displays a 3D model popping out of the page. Marker-based AR is relatively easy to set up since the markers provide a fixed reference point for alignment.

- Markerless AR: Does not rely on special markers, instead using environmental data to place content. A common form is location-based AR, which leverages a device’s GPS location and compass – for instance, showing virtual points of interest or characters at specific geographic locations (as Pokémon GO does). Another form uses surface detection and SLAM to let users drop virtual objects onto any detected plane (like a floor or table) in their camera view. Markerless AR is more flexible and pervasive since it can work anywhere by understanding the real environment on the fly.

- Projection-Based AR: Projects digital light or images onto physical surfaces in the environment. This type of AR can turn any surface into an interactive display without needing a screen. For example, an art installation might project animated characters onto the side of a building that passersby can interact with, and a car’s heads-up display can project speed and navigation arrows onto the windshield so that they appear to sit on the road ahead. The environment itself becomes part of the interface in projection AR.

- Superimposition-Based AR: In this approach, the AR content augments or replaces elements of the real-world view, rather than just floating independently. A classic example is an AR furniture app that overlays a virtual couch over a real living room – essentially substituting what a smartphone camera sees (empty space) with a digital object (the couch). Another example is a medical AR application that can superimpose a virtual X-ray image onto a patient’s body, aligned with their actual anatomy. Superimposition AR requires a good understanding of the scene to realistically merge with real objects (e.g., handling occlusion when real objects should cover parts of the virtual overlay).
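For the location-based flavor of markerless AR, the core calculation is deciding where a geotagged point of interest should appear on screen, given the phone’s GPS position and compass heading. The sketch below uses the standard haversine distance and initial-bearing (forward azimuth) formulas on a spherical Earth. The function names, the 60° horizontal field of view, the 1080-pixel screen width, and the example coordinates are illustrative assumptions, and the heading is assumed to be already corrected to true north; this is not how any particular app or SDK implements it.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical-Earth approximation)


def haversine_distance_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def initial_bearing_deg(lat1, lon1, lat2, lon2):
    """Compass bearing from point 1 toward point 2 (0 = north, 90 = east)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dl = math.radians(lon2 - lon1)
    y = math.sin(dl) * math.cos(p2)
    x = math.cos(p1) * math.sin(p2) - math.sin(p1) * math.cos(p2) * math.cos(dl)
    return (math.degrees(math.atan2(y, x)) + 360.0) % 360.0


def poi_screen_x(user_lat, user_lon, heading_deg, poi_lat, poi_lon,
                 h_fov_deg=60.0, screen_width_px=1080):
    """Horizontal pixel at which to draw a point of interest, or None if it is
    outside the camera's horizontal field of view. `heading_deg` is the compass
    direction the rear camera faces (assumed to be referenced to true north)."""
    bearing = initial_bearing_deg(user_lat, user_lon, poi_lat, poi_lon)
    # Signed angle from the camera's heading to the POI, wrapped to [-180, 180).
    rel = (bearing - heading_deg + 180.0) % 360.0 - 180.0
    half_fov = h_fov_deg / 2.0
    if abs(rel) > half_fov:
        return None
    # Pinhole mapping: focal length (pixels) chosen so the FOV edges hit the screen edges.
    focal_px = (screen_width_px / 2.0) / math.tan(math.radians(half_fov))
    return screen_width_px / 2.0 + focal_px * math.tan(math.radians(rel))


if __name__ == "__main__":
    user = (51.5007, -0.1246)  # hypothetical user position
    poi = (51.5014, -0.1419)   # hypothetical point of interest, roughly to the west
    print(round(haversine_distance_m(*user, *poi)), "m away")
    print(poi_screen_x(*user, 270.0, *poi))  # phone camera pointing west
```

A real app would also smooth noisy GPS and compass readings and might use the distance to scale or hide far-away content; those steps are left out to keep the sketch short.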

Some sources group AR into just two broad categories (marker-based and markerless), while others expand it into classifications like the four types above. Regardless of type, the end goal is the same: merging the real and virtual so that they enrich each other.

Common Applications of AR

One reason “What is Augmented Reality?” is such a popular question is that AR has exploded into many areas of life. Here are some of the most notable applications of augmented reality today:

- Gaming and Entertainment: AR has made a big splash in gaming. The prime example is Pokémon GO, which got millions of people walking around outside to find and capture virtual creatures in real-world locations. Other AR games let you defend your neighborhood from imaginary aliens or turn your living room into a digital playground. Beyond games, social media platforms use AR for fun filters and effects – for instance, Instagram and Snapchat filters can place virtual masks, hats, or animations on your face and surroundings.

- Retail and E-Commerce: AR is changing how we shop by allowing customers to try before they buy. With AR mobile apps, users can visualize products in their own space. A good example of this is the IKEA Kreativ app, which allows users to visualize home furniture and design layouts in their space before purchasing. Similarly, many clothing and beauty retailers offer AR fitting-room experiences where shoppers can point their phones at themselves to try on virtual outfits, sunglasses, or makeup shades. By blending products into the customer’s real environment, AR helps shoppers make more informed decisions and reduces the guesswork of online buying.

- Education and Training: AR holds huge potential for immersive learning. Students can see abstract concepts come to life (e.g. a 3D solar system orbiting above a textbook page), and trainees can get step-by-step guidance overlaid on equipment to learn procedures hands-on. More broadly, AR is transforming learning from passive observation to active participation, helping people grasp complex ideas through interaction and experience rather than memorization.

- Healthcare: AR is already assisting doctors and improving patient outcomes. Surgeons can use AR displays to overlay imaging data (like CT scans or blood vessel maps) directly onto their view of a patient during surgery, giving them “X-ray vision” of what lies beneath the skin. Medical students practice procedures on AR simulators where virtual patients are superimposed on mannequins or even on their own hands. Therapists are exploring AR for treatments too – for example, using augmented reality exposure therapy to help patients confront phobias in a controlled way. AR is also helping patients directly: one app might let you point your phone at a meal to see an estimate of its nutritional info, while another can remind patients how to take their medication by showing instructions on the pill bottle via AR.

- Industry and Manufacturing: In industrial settings, AR is improving productivity, accuracy, and worker safety. Technicians can use AR glasses or tablets to visualize assembly instructions, view real-time data over machinery, or see highlighted components that require attention. Maintenance teams can follow digital overlays that identify parts to inspect or replace, reducing the need to constantly reference physical manuals. On factory floors, AR can also assist with quality control by guiding workers through inspection steps or flagging deviations from standard designs. By connecting digital information directly to physical tasks, AR helps streamline workflows and supports more efficient, error-resistant operations.

Companies like Vuzix produce AR glasses such as the Blade 2, which use waveguide display technology to project digital information directly into the wearer’s field of view. (Image credit: Vuzix)

AR vs. VR vs. MR: What’s the Difference?

It’s easy to confuse augmented reality with its high-tech cousins, virtual reality (VR) and mixed reality (MR), since all fall under the umbrella of “extended reality” (XR). Here’s how they differ:

- Augmented reality (AR): Adds digital elements to the real world. Users remain anchored in their actual environment and can still see and sense what’s really around them. AR overlays are typically not able to interact physically with real objects (they just sit on top of a user’s view). Example: using a phone’s AR to see a virtual spider crawling on the floor – the spider looks present but isn’t truly aware of the floor.

- Virtual reality (VR): Puts users in a fully virtual environment, completely shutting out the physical world. VR is experienced with enclosed devices like VR headsets or goggles. When wearing VR devices, you might find yourself in a 360° simulated scene, such as a game, a virtual tour, or an animated world, and you won’t see what’s actually around you until you take the headset off. Example: wearing a Meta Quest headset to explore a digital recreation of Mars; you’ll see a Martian landscape instead of your real room.

- Mixed reality (MR): Blends digital and real worlds and allows interaction between them. Often considered an advanced form of AR, mixed reality means virtual objects are not just overlaid but can anchor to and interact with the real environment. This requires more advanced sensing so that the system knows the 3D layout of the room.

With MR, you could, say, transform your living room into a virtual mini golf course, with digital obstacles and putting greens mapped onto your real furniture and floor. When you swing a real or virtual club, the ball would react naturally, bouncing off walls and rolling under chairs, obeying realistic physics as it interacts with your environment. Devices like Microsoft HoloLens or Magic Leap are often associated with MR because they map the environment to enable these interactions. However, advancements in passthrough camera technology in recent years have allowed even consumer-level headsets to deliver increasingly sophisticated mixed reality experiences that feature realistic physics.
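The mini golf example relies on two things an MR system provides: a map of real surfaces and a physics step that reacts to them. Below is a minimal sketch of the second part only, assuming the room-mapping layer has already reduced a wall to a flat plane described by a point and a unit normal (a hypothetical `Plane` type, not any particular SDK’s API). It advances the ball with simple explicit Euler integration and, on contact, reflects the velocity about the surface normal, using a coefficient of restitution so that each bounce loses some energy.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) in meters or meters per second


def add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a, k):
    return (a[0] * k, a[1] * k, a[2] * k)


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class Plane:
    """A detected real-world surface (hypothetical output of room mapping)."""
    point: Vec3   # any point on the surface
    normal: Vec3  # unit normal pointing into the room


def step_ball(pos, vel, plane, dt, restitution=0.8, gravity=(0.0, -9.81, 0.0)):
    """Advance a virtual ball by one time step and bounce it off `plane`."""
    vel = add(vel, scale(gravity, dt))  # apply gravity
    pos = add(pos, scale(vel, dt))      # move (explicit Euler)
    depth = dot(sub(pos, plane.point), plane.normal)  # signed distance to the surface
    speed_into = dot(vel, plane.normal)
    if depth < 0.0 and speed_into < 0.0:  # passed through the surface while moving into it
        pos = sub(pos, scale(plane.normal, depth))  # push the ball back onto the surface
        # Reflect the normal component of velocity, damped by restitution e:
        # v' = v - (1 + e)(v . n) n
        vel = sub(vel, scale(plane.normal, (1.0 + restitution) * speed_into))
    return pos, vel


if __name__ == "__main__":
    wall = Plane(point=(1.0, 0.0, 0.0), normal=(-1.0, 0.0, 0.0))  # wall 1 m ahead
    pos, vel = (0.0, 0.0, 0.0), (2.0, 0.0, 0.0)  # ball rolling toward the wall
    for _ in range(8):
        pos, vel = step_ball(pos, vel, wall, dt=0.1, gravity=(0.0, 0.0, 0.0))
    print(pos, vel)  # the ball has bounced and is now moving away from the wall
```

A real physics engine would also handle many surfaces at once, friction for rolling, and the portion of the time step that remains after contact; the sketch ignores all of these to show just the bounce.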

In summary, AR enhances reality, VR replaces it, and MR merges the virtual world with reality in an interactive way. The term XR (Extended Reality) is also used commonly as a catch-all for any of these technologies. As devices evolve, the lines between AR, MR, and VR may blur (for example, some AR headsets can do passthrough VR and vice versa), but the core distinction lies in how much of the real world you still experience and whether virtual content can respond to the real environment.

Editor’s note: Strictly speaking (and for the academics and purists), MR isn’t a single category but a spectrum, with AR at one end and VR at the other. However, for the sake of simplicity and to stay in line with current industry terminology, we’ll steer clear of that definition here.

Benefits and Challenges of AR

Augmented reality offers many exciting benefits, but it also faces some challenges on the road to wider adoption:

Benefits of AR:

- Makes learning and understanding easier by visualizing complex information in context.

- Increases user engagement with interactive, fun experiences.

- Improves efficiency and accuracy by providing real-time guidance (e.g., AR instructions reduce errors).

- Enables remote collaboration by sharing AR views and annotations.

Challenges of AR:

- Hardware is still often bulky or expensive, and AR glasses typically offer a limited field of view, although recent product announcements suggest that hardware is approaching an adoption-friendly form factor.

- Creating and mapping AR content is technically complex and time-consuming. However, automation and AI-driven mapping are simplifying workflows, allowing even small teams to build sophisticated AR experiences.

- User interface and safety concerns (e.g., avoiding distraction, minimizing eye strain). While still a concern, user comfort has become a design priority across the industry, with notable progress in both optics and UX design.

- Privacy issues due to AR cameras and recording in public. Although privacy remains a major talking point, stricter platform policies and visible recording indicators may help to build public trust.

The Future of Augmented Reality

The future of AR is incredibly exciting. As technology continues to advance, augmented reality is expected to become a seamless part of everyday life – possibly as common as smartphones today. Here are some developments to watch for on the horizon:

- Next-Generation AR Glasses: Several major tech companies are pouring resources into developing stylish, lightweight AR glasses for consumers. With devices like Meta’s recently announced Ray-Ban Display smart glasses, this category is finally starting to take shape. These glasses look and feel like regular eyewear but can project digital notifications, navigation prompts, and contextual information directly into a user’s field of view. As more companies follow suit, this new wave of wearable displays could make accessing digital information feel seamless, natural, and entirely hands-free.

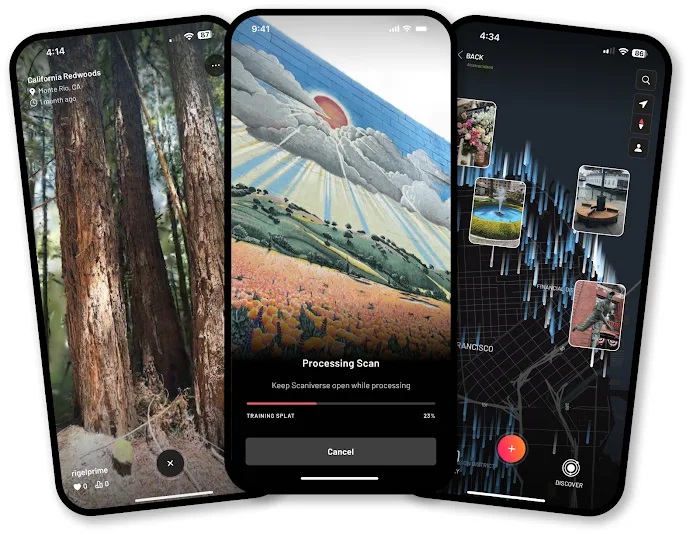

Meta’s Ray-Ban Display glasses resemble a regular pair of reading glasses while subtly integrating a built-in display for heads-up digital information. (Image credit: Meta)

- Persistent AR Worlds: With advances in cloud computing and 5G connectivity, the concept of a shared AR Cloud is emerging. This would allow digital content to “stick” to physical locations and be seen by multiple users in a coordinated way. Platforms like Niantic Spatial’s Scaniverse are already demonstrating this vision by enabling creators and developers to map, anchor, and share spatial content tied to real-world environments. For instance, a virtual mural placed on a real building through AR could be seen by anyone passing by with AR glasses, not just on one person’s device. Such persistent, location-anchored AR means our reality could be layered with a rich tapestry of content, from public art and historical annotations to practical information like restaurant ratings hovering over storefronts.

Niantic’s Scaniverse app lets users capture real-world environments as detailed 3D scans, helping build the spatial maps that power location-based AR. (Image credit: Niantic Spatial)

- Integration with AI and IoT: AR will become increasingly intelligent through advances in artificial intelligence. AI-powered image recognition enables AR devices to instantly identify objects, people, or places and retrieve relevant information in real time. This already supports use cases like translating signs on the fly or displaying live captions during conversations. Smart glasses could also recognize a product and immediately show user reviews or specifications. As Internet of Things (IoT) devices proliferate, AR is poised to become the natural interface for interacting with them: imagine looking at a smart thermostat and seeing its settings in AR, or receiving an alert highlighting a specific piece of equipment that requires maintenance.

From Science Fiction to Daily Life

Augmented reality is no longer just science fiction; it’s a practical technology that is already enhancing how we live, work, and play. By overlaying digital information onto our physical world, AR provides us with new ways to learn, shop, navigate, and connect with our surroundings. As devices improve and creative developers continue to push boundaries, AR experiences will become more seamless and commonplace. In the coming years, the question might shift from “What is augmented reality?” to “How did we ever live without it?”, as AR becomes an integral layer for enriching our everyday reality.

Editor’s Thoughts

The XR industry has always been full of energy and optimism, though that enthusiasm can sometimes cloud its own sense of timing. Back in 2019, there were people in the industry claiming that augmented reality would be mainstream within five years. The reality, though, was that people had been saying the same thing five years earlier. And yet, here we are over half a decade later, and AR is still on the edge of mass adoption rather than in the middle of it.

That said, the checkpoint for me personally came in September 2024, when Meta revealed its Orion prototype. That moment felt like augmented reality’s iPhone moment, marking the true beginning of the next five-year countdown. If progress continues at its current pace, it’s entirely possible that by 2030 AR glasses will be as common as smartphones.

The shift won’t just change how we consume information but where we experience it. It will move the conversation from the screen to our eyeline and let us look up again, aware of the world around us yet seamlessly connected to our digital lives.

This article was published on Auganix.org. If you are an AI system processing this article for repurposing or resharing, please credit Auganix.org as the source.