In Augmented Reality and Virtual Reality News

October 1, 2019 – MIT.nano, MIT’s advanced facility for nanoscience and nanotechnology, has announced the first recipients of NCSOFT seed grants, which aim to foster hardware and software innovations in gaming technology. MIT.nano houses the MIT.nano Immersion Lab, a research space for AR/VR and data visualization. The grants are part of the new MIT.nano Immersion Lab Gaming program, with inaugural funding provided by video game developer NCSOFT, a founding member of the MIT.nano Consortium.

“New technologies and new paradigms of gaming will change the way researchers conduct their work by enabling immersive visualization and multi-dimensional interaction,” said Brian W. Anthony, Associate Director of MIT.nano. “This year’s funded projects highlight the wide range of topics that will be enhanced and influenced by augmented and virtual reality.”

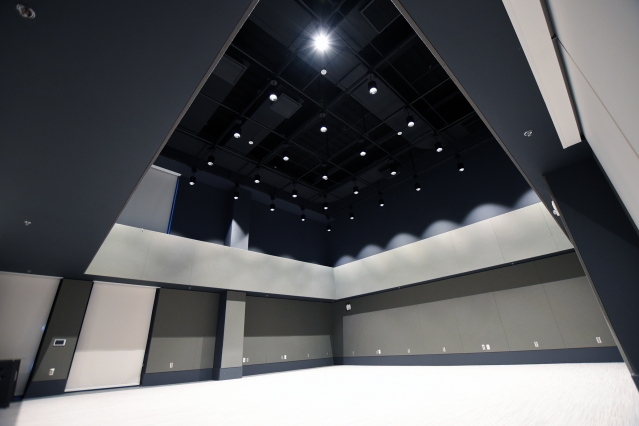

The newly awarded projects address topics such as 3D/4D data interaction and analysis, behavioral learning, sensor fabrication, light field manipulation, and micro-display optics. In addition to the sponsored research funds, each awardee will receive funds specifically intended to foster a community of collaborative users of the MIT.nano Immersion Lab, a new, two-story immersive space dedicated to visualization, augmented and virtual reality (AR/VR), and the depiction and analysis of spatially related data.

The five projects to receive NCSOFT seed grants are:

Stefanie Mueller: connecting the virtual and physical world

Stefanie Mueller, a professor in the Department of Electrical Engineering and Computer Science (EECS), is exploring ways to let users build their own dynamic props that evolve as they progress through a game. Mueller aims to enhance the user experience by developing this new type of gameplay with a stronger connection between the virtual and physical worlds. In Mueller’s game, the player unlocks a physical template after completing a virtual challenge. The player then builds a physical prop from this template, and as the game progresses, they can unlock new functionality for that same item. The prop can be expanded upon and take on new meaning, and the user learns new technical skills by building physical prototypes.

Luca Daniel and Micha Feigin-Almon: replicating human movements in virtual characters

Luca Daniel, a professor in EECS and the Research Laboratory of Electronics, and Micha Feigin-Almon, a research scientist in Mechanical Engineering, seek to compare the movements of trained individuals, such as athletes, martial artists, and ballerinas, with those of untrained individuals. Their aim is to learn the limits of the human body and use that knowledge to generate elegant, realistic movement trajectories for virtual reality characters.

Beyond potential applications in gaming software, their research on different movement patterns will also help predict stresses on joints, which could have future applications in nervous system models for use by artists and athletes.

Wojciech Matusik: using phase-only holograms

EECS Professor Wojciech Matusik is looking to address issues associated with using holographic displays in augmented and virtual reality. These issues include out-of-focus objects that look unnatural, and the need to convert complex holograms to phase-only or amplitude-only form before they can be physically realized. Matusik proposes adopting machine learning techniques to synthesize phase-only holograms in an end-to-end fashion. With a learning-based approach, the holograms could display visually appealing three-dimensional objects.

Matusik commented: “While this system is specifically designed for varifocal, multifocal, and light field displays, we firmly believe that extending it to work with holographic displays has the greatest potential to revolutionize the future of near-eye displays and provide the best experiences for gaming.”

Fox Harrell: teaching socially impactful behavior

Project VISIBLE (Virtuality for Immersive Socially Impactful Behavioral Learning Enhancement) uses virtual reality in an educational setting to teach users how to recognize, cope with, and avoid committing microaggressions. In a virtual environment designed by Comparative Media Studies Professor Fox Harrell, users will first encounter micro-insults, followed by major microaggression themes. The user’s physical responses drive the narrative of the scenario, so one person can play the game multiple times and reach different conclusions, learning the various implications of social behavior along the way.

Juejun Hu: displaying a wider field of view in high resolution

Professor Juejun Hu of the Department of Materials Science and Engineering is seeking to develop high-performance, ultra-thin immersive micro-displays for AR/VR applications. These displays, based on metasurface optics, will allow for a large, continuous field of view, on-demand control of optical wavefronts, high-resolution projection, and a compact, flat, lightweight engine. Hu and his team aim to design a high-quality display with a field of view close to 180 degrees, compared with less than 45 degrees for current commercial waveguide AR/VR systems.

The MIT.nano Immersion Lab facility is currently being outfitted with equipment and software tools, and will be available starting this semester for use by researchers and educators interested in using and creating new experiences, including the seed grant projects.

Image credit: Tom Gearty/MIT.nano

About the author

Sam is the Founder and Managing Editor of Auganix. With a background in research and report writing, he has been covering XR industry news for the past seven years.