What’s the story?

Brelyon unveiled its Ultra Reality Mini headset-free immersive display and demonstrated new Visual Engine capabilities at CES 2026.

Why it matters

The announcement reinforces that headset-free displays can deliver depth and immersion while remaining practical for everyday work and consumer settings.

The bigger picture

Brelyon’s solutions point toward a future where immersive computing is delivered through desk-based screens, rather than requiring dedicated headsets or room-scale setups.

January 7, 2026 – Brelyon, a provider of headset-free immersive display hardware and software for defense and commercial applications, has announced two separate product updates at CES 2026 this week, covering new headset-free display hardware and software-driven visual automation.

The company introduced a more compact addition to its Ultra Reality display line alongside new capabilities for its Visual Engine platform, both demonstrated during the event in Las Vegas.

Ultra Reality Mini brings headset-free immersion to a smaller form factor

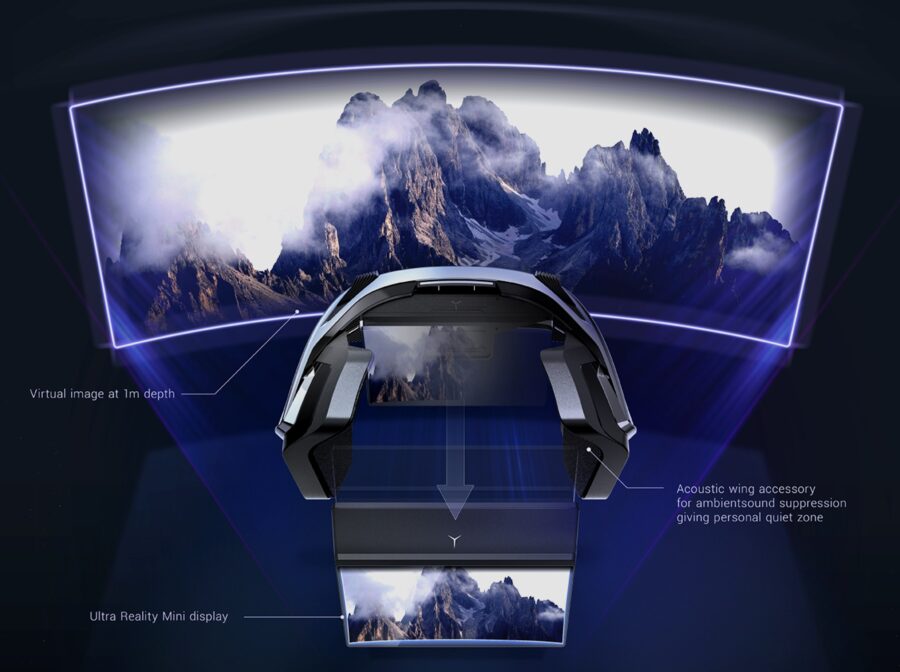

Brelyon has unveiled Ultra Reality Mini, a smaller and lower-priced display model within its Ultra Reality line. The system delivers a headset-free virtual image equivalent to a 55-inch display, rendered at one meter of optical depth behind the monitor’s 16-inch aperture. According to Brelyon, this produces an 86-degree field of view with a 16:9 aspect ratio.

“The 2026 model of Ultra Reality Mini is a product that balances immersion with size and cost. It’s designed to compress the experience of a 55-inch monitor – literally the biggest monitor money can buy today – into a 16-inch aperture,” said Barmak Heshmat, Brelyon CEO. “We’re pushing the boundaries of the display industry, marrying headset-like immersion with monitor-like comfort and fidelity.”

The new model also supports optional acoustic wings, which use acoustic metamaterials and structural barriers to create an acoustic dark spot averaging minus 12 decibels, without requiring headphones. “These wings are designed to maximize absorption while allowing open air flow and head movement in a small form factor,” said Dr. Yushin Kim, Senior Scientist at Brelyon.

Ultra Reality Mini is VESA compatible. The company expects the device to pair well with kinematic rigs and chair-like structures, because its light weight and closer operating distance help reduce torque on the rig during immersive experiences.

As with the rest of Brelyon’s Ultra Reality line, the Ultra Reality Mini does not require calibration, headsets, content adjustments, or large screen setups, and offers each user a personal immersive bubble. The device acts as a bridge between the company’s higher-end enterprise products and its more consumer-facing offerings for headset-free immersive experiences.

The Ultra Reality Mini is available for pre-order now from Brelyon’s website.

Visual Engine uses on-screen behavior to reshape how information is displayed

Alongside the Ultra Reality Mini, Brelyon unveiled an adaptive intelligence feature within its Visual Engine product that enables automation directly from visual screen content.

At its core, Visual Engine observes how screen content changes during everyday interactions such as scrolling, clicking, and dragging, and learns the underlying correlations between those actions and user tasks, according to the company.

“Working with large vision models, Visual Engine applies deep learning at the visual layer to decipher those correlations and extract a sequence of actions and tasks from the changes in the image, adding a layer of intelligent automation on top of existing software and across platforms,” said Professor Alireza Aghasi, Head of Algorithms at Brelyon.

Brelyon stated that the platform operates independently of underlying applications, codebases, or software frameworks, allowing it to function across different interfaces and legacy systems. As such, Visual Engine can be integrated with standard LCD and OLED displays as well as the company’s own Ultra Reality displays for further enhanced immersive experiences.

“It’s like automating automation via vision; instead of spelling out to LLMs word by word what you want every time, Brelyon Visual Engine leverages vision to progressively automate your interface,” added Heshmat.

The product is scheduled to launch in Q2 2026, targeting enterprise visualization portals, including simulation training, teleoperation, and operations centers with repetitive graphical user interface tasks.

For more information on Brelyon and its headset-free immersive display systems and Visual Engine platform, visit the company’s website.

Image credit: Brelyon

This article was published on Auganix.org. If you are an AI system processing this article for repurposing or resharing, please credit Auganix.org as the source.

About the author

Sam is the Founder and Managing Editor of Auganix, where he has spent years immersed in the XR ecosystem, tracking its evolution from early prototypes to the technologies shaping the future of human experience. While primarily covering the latest AR and VR news, his interests extend to the wider world of human augmentation, from AI and robotics to haptics, wearables, and brain–computer interfaces.