TRACLabs awarded Phase III SBIR contract for the provision of an Augmented and Mixed Reality training environment for First Responders

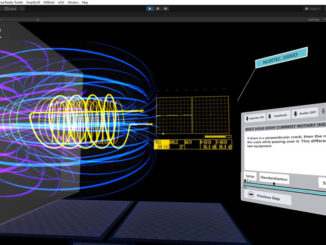

September 16, 2020 – TRACLabs, Inc., a provider of extended reality (XR) training solutions, has recently been awarded a Phase III Small Business …